Built with the help of Microsoft’s AI.lab and Azure, a new app called Sketch2Code turns whiteboard sketches into working code. As noted in a post on the Azure blog, Sketch2Code deciphers photos of whiteboard sketches and converts them into valid HTML markup.

Here’s how Sketch2Code processes images into code:

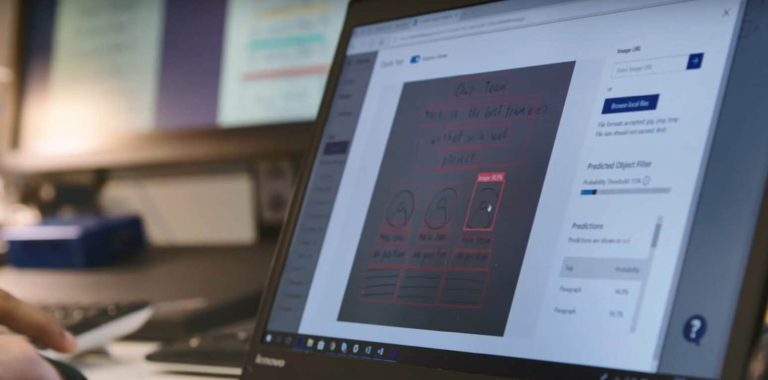

- A user uploads an image through the website.

- A custom vision model predicts what HTML elements are present in the image and their location.

- A handwritten text recognition service reads the text inside the predicted elements.

- A layout algorithm uses the spatial information from the bounding boxes of all predicted elements to generate a grid structure that accommodates them.

- An HTML generation engine uses all of this information to generate HTML markup reflecting the result.
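The last two steps can be illustrated with a minimal Python sketch. This is not Sketch2Code's actual algorithm: the `Element` class, the tag names, the row-grouping rule, and the `tolerance` parameter are all assumptions made for illustration. It only shows the general idea of turning labeled bounding boxes into rows and then into markup.

```python
from dataclasses import dataclass
from html import escape


@dataclass
class Element:
    """One predicted element (hypothetical shape, not Sketch2Code's own type)."""
    tag: str    # predicted element type, e.g. "button" (assumed label set)
    text: str   # text read by the handwriting recognizer
    x: float    # left edge of the bounding box
    y: float    # top edge of the bounding box
    w: float    # box width
    h: float    # box height


def group_into_rows(elements, tolerance=0.5):
    """Group boxes into rows by vertical center.

    A box joins the current row when its center lies within
    tolerance * height of the row's first box; otherwise it starts a new row.
    This rule is an assumption, chosen for simplicity.
    """
    rows = []
    for el in sorted(elements, key=lambda e: e.y + e.h / 2):
        center = el.y + el.h / 2
        if rows:
            anchor = rows[-1][0]
            anchor_center = anchor.y + anchor.h / 2
            if abs(center - anchor_center) <= tolerance * anchor.h:
                rows[-1].append(el)
                continue
        rows.append([el])
    # Order each row left to right.
    return [sorted(row, key=lambda e: e.x) for row in rows]


def render(el):
    """Map one element to an HTML snippet, escaping the recognized text."""
    text = escape(el.text)
    if el.tag == "button":
        return f"<button>{text}</button>"
    if el.tag == "textbox":
        return f'<input type="text" placeholder="{text}">'
    if el.tag == "image":
        return f'<img alt="{text}">'
    return f"<p>{text}</p>"  # fallback for any other tag


def generate_html(elements):
    """Emit one <div class="row"> per detected row."""
    lines = []
    for row in group_into_rows(elements):
        lines.append('<div class="row">')
        lines.extend(f"  {render(el)}" for el in row)
        lines.append("</div>")
    return "\n".join(lines)
```

Calling `generate_html` with a label and a text box at roughly the same height, plus a button further down, would produce two row `<div>`s: the first holding the label and the input, the second holding the button.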

Sketch2Code uses the following Microsoft AI and Azure resources:

- A Microsoft Custom Vision Model: This model has been trained on images of different handwritten designs, tagged with the most common HTML elements, such as buttons, text boxes, and images.

- A Microsoft Computer Vision Service: Identifies the text written inside a design element.

- Azure Blob Storage: The output of every step in the HTML generation process is stored, including the original image, prediction results, and layout grouping information.

- An Azure Function: Serves as the backend entry point that coordinates the generation process by interacting with all the services.

- An Azure website: User front-end for uploading a new design and viewing the generated HTML results.
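To make the division of labor concrete, here is a rough Python sketch of the coordinating role the Azure Function plays. It does not use any real Azure SDK: the `detect`, `read_text`, `group`, and `render` callables are hypothetical stand-ins for the services listed above, a plain `dict` stands in for Blob Storage, and the stored file names are invented for illustration.

```python
import json


def run_pipeline(image, detect, read_text, group, render, store, run_id):
    """Coordinate one generation run and persist each intermediate result.

    All parameters are assumptions for illustration:
      detect(image)         -> list of dicts describing predicted elements
      read_text(image, box) -> recognized text for one element
      group(elements)       -> layout structure (e.g. rows of elements)
      render(layout)        -> HTML string
      store                 -> dict standing in for blob storage
    """
    # Keep the original upload.
    store[f"{run_id}/original.png"] = image

    # Step 1: element prediction.
    boxes = detect(image)
    store[f"{run_id}/predictions.json"] = json.dumps(boxes).encode()

    # Step 2: attach recognized text to each predicted element.
    labeled = [{**box, "text": read_text(image, box)} for box in boxes]

    # Step 3: layout grouping.
    layout = group(labeled)
    store[f"{run_id}/layout.json"] = json.dumps(layout).encode()

    # Step 4: HTML generation.
    html = render(layout)
    store[f"{run_id}/result.html"] = html.encode()
    return html
```

Passing the services in as plain callables keeps the coordinator independent of any particular vendor client, so each stage could be swapped or stubbed out in tests.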

Sketch2Code was developed in collaboration with Kabel and Spike Techniques. You can find all the resources for Sketch2Code on GitHub.