There is an exhausted cliché used in the aftermath of most Apple hardware keynotes, reverently referred to as the Apple Reality Distortion Field. In the case of the company’s new mixed reality Vision Pro headset announced at WWDC this year, Apple has quite literally created its own reality distortion field, and it wants developers to do something they haven’t been able to do for decades in augmented and virtual reality: give most people a reason to use it beyond demos.

At the close of a two-hour opening keynote at its Worldwide Developers Conference, Apple CEO Tim Cook drew upon a 24-year-old company trick, the “one more thing” line, to introduce its new mixed reality headset, the Vision Pro.

A lineup of Apple executives took to the digital stage to extol the various virtues of yet another AR/VR headset entering the sector, with demos of the customary theater simulation, floating application windows, eye and hand gesture recognition, gaming, and of course office productivity.

To Apple’s credit, it has seemingly unloaded its wallet to include the most advanced tech accessible now, and packed the Vision Pro with six atmospheric cameras, three LiDAR scanning modules, two downward-facing cameras, a second chip to process all camera activity, custom micro-OLED displays resulting in 4K resolution per eye or 23 million pixels, an array of IR cameras and LED illuminators for eye tracking, an additional expensive outer display, and built-in speakers.

However, one area where the Vision Pro’s reality distortion field falls short is in the demos and use cases highlighted by Apple during the keynote, as well as in the first-hand experiences recounted by tech journalists who spent brief time with the device.

While the hardware is perhaps the sleekest VR/AR headset yet, almost nothing Apple demoed during its 30-minute presentation felt new or made more sense than what preceded its arrival. Immersive theatrical movie viewing has been a staple of most VR headsets, since the screen can be placed inches from the user’s eyes and much of the outside world is shut out.

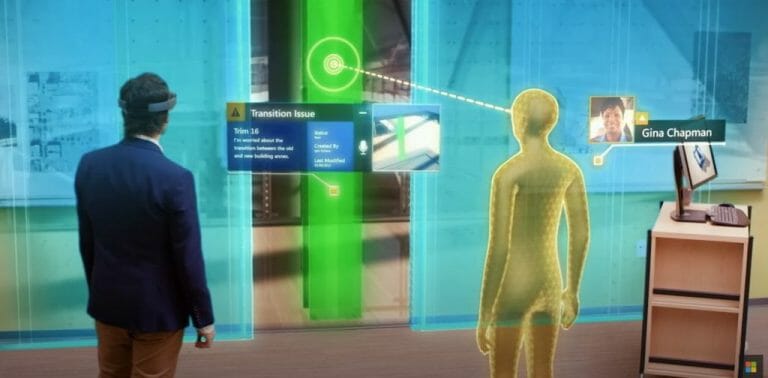

The idea that we can litter our current, unobstructed view of the world with digital overlays of application windows is another standard demo from every AR headset, including Magic Leap, HoloLens, HTC VIVE, Lenovo ThinkReality, and Rokid Max. Computer-generated elements breaking 3D space is also a staple demo that has become such table stakes that Apple has given up showcasing it as part of its ARKit updates to iOS and iPadOS.

Put side by side, the productivity demo is almost beat for beat what Microsoft showed off seven years ago with HoloLens and, to a lesser extent, what Meta hopes to accomplish with its own Metaverse take, so much so that Apple highlighted Microsoft Office support.

Taking conference calls, triaging productivity apps in 3D space, and browsing the web are not only old concepts, but they continually prove to be less efficient than the combo of laptops, Bluetooth keyboards and mice, and monitors we currently use, as evidenced by the lackluster presence of headsets in the office.

The fact that most AR headsets become single-use, specialized application tools is yet another reminder that developers have hit a wall in figuring out how to bring multitasking into AR and VR experiences without overloading the user.

My point is, beyond the oohs and ahhs of a new piece of tech from Apple, there have been few critically thought-out takes on what Apple’s vision is for the Vision Pro, pun intended. Similar to the Apple Watch, Apple has flooded the zone with a greatest-hits collection of demos already done by previous AR and VR headset makers.

What Apple has successfully done is give the YouTube journalist crowd new marketing jargon to recite and absorb the media’s attention for the time being, but it’s arguable whether the company presented anything that makes anyone “think different” about how AR and VR should be approached.

The Vision Pro is yet another expensive piece of experience-isolating hardware, just as other headsets such as the HoloLens and earlier versions of the Meta Quest have been. Despite the limitless possibilities Apple could have strived for in its own polished fantasy demos, it stuck to what almost every other headset has shown.

Another crack surfacing in Apple’s Reality Distortion Field around the Vision Pro is the fact that it’s being sold as a mixed reality device delivering spatial computing when in fact it’s just VR with video passthrough.

If someone puts a thumb over the cameras on a HoloLens, the AR elements disappear but the user can still see the outside world. If someone covers the cameras on the Vision Pro, however, the user is essentially blind.

Most AR/VR headsets attempt to specialize in a single kind of reality display, such as the Meta Quest Pro going for mostly immersive VR or the HoloLens (despite Microsoft’s marketing of Mixed Reality) aiming for AR. What Apple showed off during its Vision Pro announcement was mostly VR experiences with digital passthrough, and I think the company is going to find itself painted into a corner trying to evolve it.

A user of the Vision Pro isn’t actually viewing the real outside world with computer-generated images applied to it, but a video reproduction of it, albeit with an almost imperceptible delay. When it comes to the FaceTime demo Apple showcased during the presentation, it was yet another digital interpretation of the user’s face being portrayed in video.

In addition, what people interacting with a person wearing the Vision Pro in close quarters are seeing is, once again, a video representation of the wearer’s eyes. In effect, Apple’s Vision Pro is a fully immersive VR headset with the ability to run a two-way video of processed imagery. I point this out because the belief, and even misconception, about the Vision Pro is that it’s the first step in a journey towards much sleeker AR glasses from the company, when in reality, Apple is leaning hard on VR and will eventually have to abandon some of the standout technological features of the headset to pivot to true AR.

As impressive as the digital crown is on the Vision Pro, it serves no purpose in an AR environment and for VR experiences, it’s equally useless. The digital crown on the Vision Pro anchors the VR aspect of the headset and for Apple to make moves towards actual AR experiences, it will most likely have to go.

The presumably expensive outer display will also have to be ditched at some point, as its only purpose is to obfuscate the reality that the Vision Pro is actually just a VR headset by relaying those digitally imposed eyes on the front, making it seem like there is a view into the user’s eyes.

Unlike the HoloLens, which uses transparent lenses to create its AR experiences, the Vision Pro uses cameras to capture the outside world and relay it to the lenses on the headset. It’s essentially the difference between wearing tinted glasses outside and holding up your phone to record: while the outcome is the same, one is far less complicated and expensive to reproduce.

The point of the comparison is that if Microsoft ever revives its HoloLens development, shrinking the tech around transparent lenses is a much shorter path to AR glasses than transitioning a fully immersive VR headset with video capture.

At the end of the day, Apple produced a really nice and technologically advanced headset that’s ballooning the device’s reality distortion field, but it’s the next six months and the next six years that will continuously toss barbs at the field to see if Apple can overcome the same roadblocks every other headset has tripped over.

It will take the next six months to six years to see if Apple can split its attention between making fetishized hardware and speaking with national and international regulatory bodies to create an AR database, accessible via the cloud, that can legally populate relevant data about the outside world.

We’ll have to see whether the implications of its facial recognition software, which identifies and records the faces of people interacting with users of the Vision Pro, become fodder for governmental debate and legislation.

We’ll also have to see if developers can develop their way out of a cycle of redundancy when it comes to headset experiences. Apple touted that the Vision Pro will have hundreds of apps accessible on day one, as it considers apps designed for the 2D smartphone screen to be acceptable experiences for a VR/AR headset.

Yes, scrolling Instagram with your eyes will be nice, but it’s not inherently different than using your finger or a mouse. The same goes for all of the apps designed around a smartphone’s touch and haptic feedback that will be ported to the Vision Pro’s app catalog. Apple seems to be making the same mistake Microsoft made with Windows, thinking it could just port historically mouse-and-keyboard apps to tablets and call it a volume win.

Experiences should feel native to the device; otherwise, users will simply use the most efficient device for seemingly redundant experiences, and often that means skipping the heavy face computer with limited mobility, battery life, and use cases.

Admittedly, the Vision Pro won’t drop into developer hands until next year, and a lot can change in six months, but from a 30,000-foot view, Apple made a VR headset that uses video passthrough to produce AR experiences very similar to those offered by other devices. At this moment, it’s unclear how Apple’s Vision Pro will go from oversized ski goggles to the improbable standard of AR glasses every other headset has been held to.