Microsoft Research wants to make face-swapping deepfakes easier, but also make forged face swapping images easier to detect

A pair of research papers jointly published by Microsoft Research and Peking University propose a new face-swapping AI and a new facial forgery detector (via VentureBeat). Both tools are said to deliver high-quality results relative to other available services while maintaining similar performance, and to do so with substantially less data.

FaceShifter is the proposed face-swapping solution. Like alternatives such as Reflect and FaceSwap, the newer tool can account for many variations, including color, lighting, facial expression and other attributes. What sets it apart, however, is that it can also account for differences in posture and viewing angle, according to the authors of the published paper.

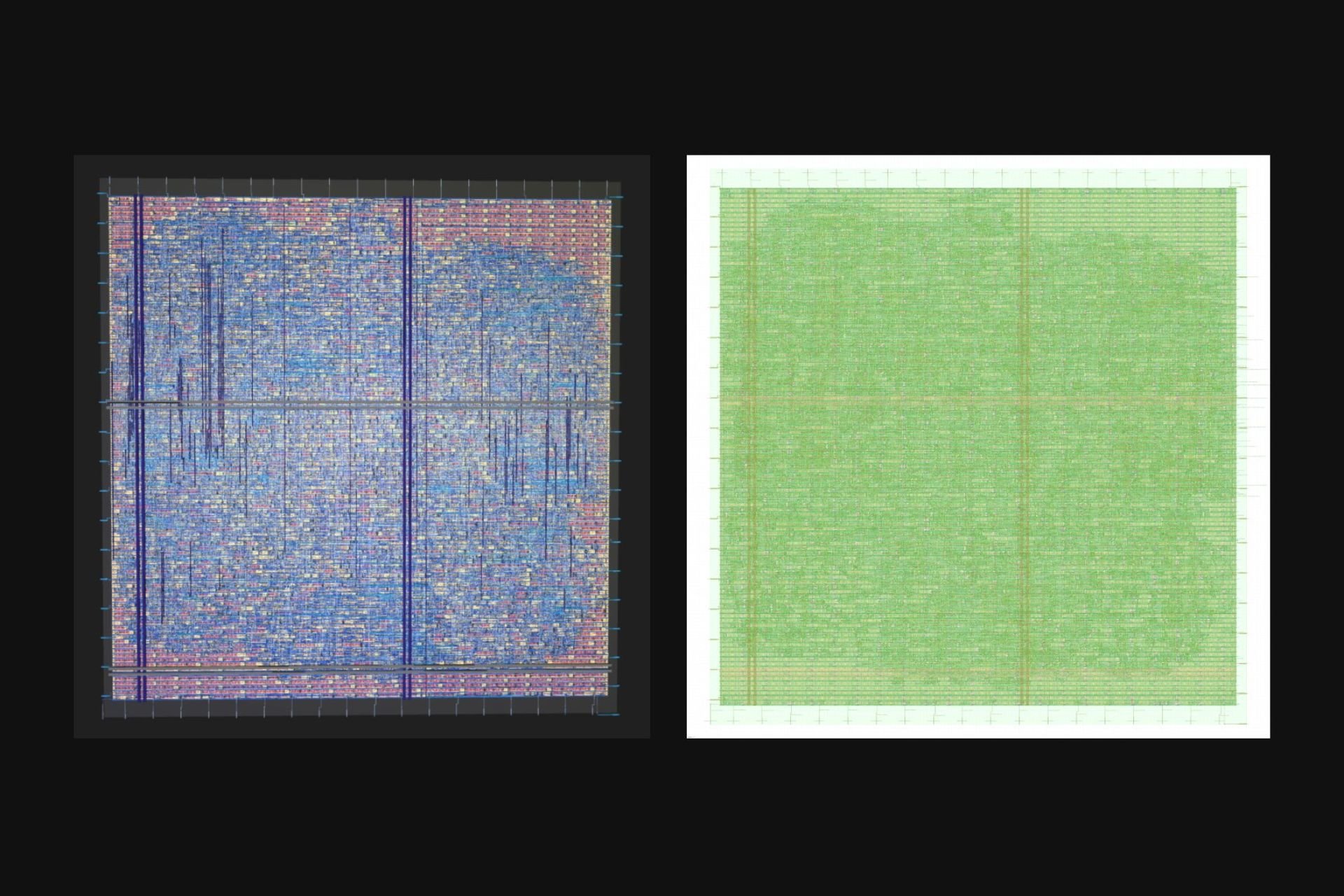

FaceShifter takes advantage of a generative adversarial network, or GAN, in which a generator tries to fool a discriminator into believing that a generated image is authentic.

In addition to FaceShifter, the proposed Face X-Ray is a tool that detects counterfeit facial images. Face swaps and image manipulations are somewhat commonly used for malicious purposes, which is what led to the research and the creation of the new AI tool for detecting fake images. In contrast to existing detectors, it doesn't need foreknowledge of the methods used in face swapping or human supervision. Instead, it creates a grayscale image that shows whether the input can be decomposed into a blend of two images from different sources. The tool was trained using FaceForensics++, a large video catalog with over a thousand original clips.
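To make the grayscale image idea more concrete, here is a minimal sketch in Python. It assumes the formulation given in the Face X-Ray paper rather than anything stated in this article: a forged image is a pixel-wise blend of a foreground face and a background image through a soft mask M, and the "X-ray" is 4 · M · (1 − M), which is bright only along the blending boundary. The function names and the tiny 1×5 "images" are hypothetical and purely illustrative. The actual detector is a trained neural network that has to predict this map from the image alone, which this sketch does not attempt.

```python
def blend(foreground, background, mask):
    """Blend two grayscale images pixel by pixel: M * fg + (1 - M) * bg."""
    return [
        [m * f + (1.0 - m) * b for f, b, m in zip(f_row, b_row, m_row)]
        for f_row, b_row, m_row in zip(foreground, background, mask)
    ]


def face_xray(mask):
    """Boundary map 4 * M * (1 - M): 0 where the mask is 0 or 1, 1 where it is 0.5."""
    return [[4.0 * m * (1.0 - m) for m in row] for row in mask]


# Hypothetical 1x5 "images": the mask ramps from background (0) to swapped face (1).
mask = [[0.0, 0.25, 0.5, 0.75, 1.0]]
foreground = [[200.0] * 5]  # pixel values of the swapped-in face
background = [[100.0] * 5]  # pixel values of the original image

forged = blend(foreground, background, mask)
xray = face_xray(mask)

print(forged)  # [[100.0, 125.0, 150.0, 175.0, 200.0]]
print(xray)    # [[0.0, 0.75, 1.0, 0.75, 0.0]]
```

For an untouched image the mask is all zeros (or all ones), so the map is blank everywhere. That is what lets a single grayscale image double as a real-or-fake signal.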

As it turns out, the tool was able to distinguish previously unseen forged images and to reliably predict blending regions. The team noted, however, that its reliance on blending also means that it might not be able to detect wholly synthetic images, and that it could potentially be defeated by adversarial samples.

What do you think about these new face-swapping and forgery detector tools? Feel free to leave a comment down below.